This posting documents my experience at the 2011 Code4Lib Conference, February 8-10 in Bloomington (Indiana). In a sentence, the Conference was well-organized, well-attended, and demonstrated the over-all health and vitality of this loosely structured community. At the same time I think the format of the Conference will need to evolve if it expects to significantly contribute to the library profession.

student center |

computers |

Code4Libbers |

Day #1 (Tuesday, February 8)

The Conference officially started on Tuesday, February 8 after the previous day’s round of pre-conference activities. Brad Wheeler (Indiana University) gave the introductory remarks. He alluded to the “new normal”, and said significant change only happens when there are great leaders or financial meltdowns such as the one we are currently experiencing. In order to find stability in the current environment he advocated true dependencies and collaborations, and he outlined three tensions: 1) innovation versus solutions at scale, 2) local-ness and cloudiness, and 3) proprietary versus open. All of these things, he said, are false dichotomies. “There needs to be a balance and mixture of all these tensions.” Wheeler used his experience with Kuali as an example and described personal behavior, a light-weight organization, and local goals as the “glue” making Kuali work. Finally, he said the library community needs to go beyond “toy” projects and create something significant.

The keynote address, Critical collaborations: Programmers and catalogers? Really?, was given by Diane Hillman (Metadata Management). In it she advocated greater collaboration between catalogers and coders. “Catalogers and coders do not talk with each other. Both groups get to the nitty-gritty before there is an understanding of the problem.” She said change needs to happen, and it should start within our own institutions by learning new skills and having more cross-departmental meetings. Like Wheeler, she had her own set of tensions: 1) “cool” services versus the existing online public access catalog, and 2) legacy data versus prospective data. She said both communities have things to learn from each other. For example, catalogers need to learn to use data that is not created by catalogers, and catalogers need not always look for leadership from “on high”. I asked what the coders needed to learn, but I wasn’t sure what the answer was. She strongly advocated RDA (Resource Description and Access), and said, “It is ready.” I believe she was looking to the people in the audience as people who could create demonstration projects to show to the wider community.

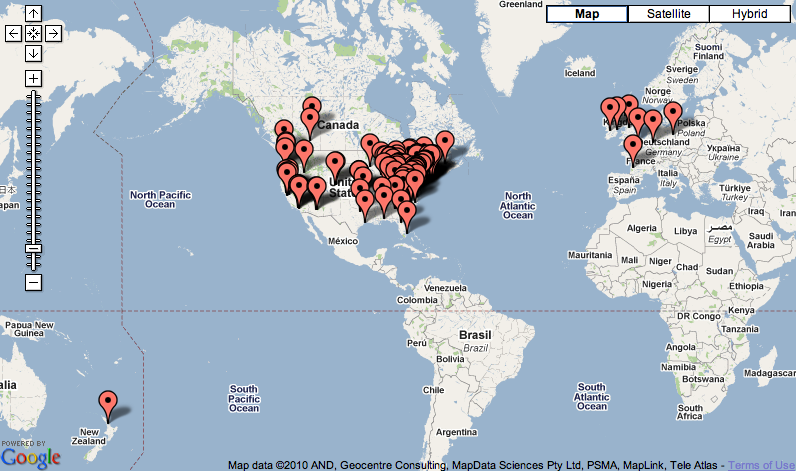

Karen Coombs (OCLC) gave the next presentation, Visualizing library data. In it she demonstrated a number of ways library information can be graphed through the use of various mash-up technologies: 1) a map of holdings, 2) QR codes describing libraries, 3) author timelines, 4) topic timelines, 5) FAST headings in a tag cloud, 6) numbers of libraries, 7) tree relationships between terms, and 8) pie charts of classifications. “Use these things to convey information that is not a list of words”.

In Hey, Dilbert, where’s my data?, Thomas Barker (University of Pennsylvania) described how he is aggregating various library data sets into a single source for analysis: http://code.google.com/p/metridoc/

Tim McGeary (Lehigh University) shared a Kuali update in Kuali OLE: Architecture of diverse and linked data. OLE (Open Library Environment) is the beginnings of an open source library management system. Coding began this month (February) with goals to build community, implement a “next-generation library catalog”, re-examine business operations, break away from print models of doing things, create an enterprise-level system, and reflect the changes in scholarly work. He outlined the structure of the system and noted three “buckets” for holding different types of content: 1) descriptive — physical holdings, 2) semantic — conceptual content, and 3) relational — financial information. They are scheduled to release their first bits of code by July.

Cary Gordon (The Cherry Hill Company) gave an overview of Drupal 7 functionality in Drupal 7 as a rapid application development tool. Of most interest to me was the Drupal credo, “Sacrifice the API. Preserve the data.” In the big scheme of things, this makes a lot of sense to me.

After lunch, first up was Josh Bishoff (University of Illinois) with Enhancing the mobile experience: mobile library services at Illinois. The most important take-away was the difference between a mobile user experience and a desktop user experience. They are not the same. “This is not a software problem but rather an information architecture problem.”

Scott Hanrath (University of Kansas) described his participation in the development of Anthologize in One week, one tool: Ultra-rapid open source development among strangers. He enumerated the group’s three criteria for success: 1) usefulness, 2) low walls & high ceilings, and 3) feasibility. He also attributed the project’s success to extraordinary outreach efforts: marketing, good graphic design, blurbs, logos, etc.

cabin |

graveyard |

church |

VuFind beyond MARC: Discovering everything else by Demian Katz (Villanova University) described how VuFind supports the indexing of non-MARC metadata through the use of “record drivers”. Acquire metadata. Map it to Solr fields. Index it while denoting it as a special metadata type. Search. Branch according to metadata type. Display. He used Dublin Core OAI-PMH metadata as an example.
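The steps Katz listed (map to index fields, tag with a metadata type, branch on that type at display time) can be sketched roughly as below. This is only an illustration in Python, not VuFind's own code, which is written in PHP. The field names, the `recordtype` key, and the driver classes are all hypothetical, and a plain list stands in for the Solr index.

```python
def map_dublin_core(dc: dict) -> dict:
    """Map a (hypothetical) Dublin Core record to index fields and tag its type."""
    return {
        "id": dc["identifier"],
        "title": dc.get("title", ""),
        "author": dc.get("creator", ""),
        "recordtype": "dc",  # tells the display layer which driver to use
    }


class RecordDriver:
    """Fallback driver: renders any indexed document by its title alone."""

    def __init__(self, doc: dict) -> None:
        self.doc = doc

    def display(self) -> str:
        return self.doc.get("title", "[untitled]")


class MarcDriver(RecordDriver):
    def display(self) -> str:
        return f"{self.doc['title']} / {self.doc['author']} [MARC]"


class DublinCoreDriver(RecordDriver):
    def display(self) -> str:
        return f"{self.doc['title']} by {self.doc['author']} [Dublin Core]"


# Hypothetical registry: metadata type -> driver class.
DRIVERS = {"marc": MarcDriver, "dc": DublinCoreDriver}


def driver_for(doc: dict) -> RecordDriver:
    """Branch on the metadata type stored alongside the document."""
    return DRIVERS.get(doc.get("recordtype"), RecordDriver)(doc)


# Acquire, map, "index", search, branch, display.
index = [
    map_dublin_core(
        {"identifier": "oai:example:1", "title": "Walden", "creator": "Thoreau"}
    )
]
hits = [doc for doc in index if "walden" in doc["title"].lower()]  # stand-in for a Solr query
for doc in hits:
    print(driver_for(doc).display())
```

The point of the design is that the search layer never needs to know what kind of metadata it is returning; only the driver chosen at display time does.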

The last formal presentation of the day was entitled Letting in the light: Using Solr as an external search component by Jay Luker and Benoit Thiell (Astrophysics Data System). ADS is a bibliographic information system for astronomers. It uses a pre-print server originally developed at CERN. They desired to keep as much of the functionality of the original server as possible but enhance it with Solr indexing. They described how they hacked the two systems to allow the searching and retrieving of millions of records at a time. Of all the presentations at the Conference, this one was the most computer science-like.

The balance of the day was given over to breakout sessions, lightning talks, a reception in the art museum, and craft beer drinking in the hospitality suite. Later that evening I retired to my room and hacked on Twitter feeds. “What do library programmers do for a good time?”

Day #2 (Wednesday, February 9)

The next day began with a presentation by my colleagues at Notre Dame, Rick Johnson and Dan Brubaker Horst. In A Community-based approach to developing a digital exhibit at Notre Dame using the Hydra Framework, they described how they are building and maintaining a digital library framework based on a myriad of tools: Fedora, Active Fedora, Solr, Hydrangea, Ruby, Blacklight. They gave examples of ingesting EAD files. They are working on an ebook management application. Currently they are building a digitized version of city plans.

I think the most inspiring presentation was by Margaret Heller (Dominican University) and Nell Taylor (Chicago Underground) called Chicago Underground Library’s community-based cataloging system. Taylor began and described a library of gray literature. Poems. Comics. All manner of self publications were being collected and loosely cataloged in order to increase the awareness of the materials and record their existence. The people doing the work have little or no cataloging experience. They decided amongst themselves what metadata they were going to use. They wanted to focus on locations and personal characteristics of the authors/publishers of the material. The whole thing reminded me of the times I suggested cataloging local band posters because somebody will find everything interesting at least once.

Gabriel Farrell (Drexel University) described the use of a non-relational database called CouchDB in Beyond sacrilege: A CouchApp catalog. With a REST-ful interface, complete with change log replication and different views, CouchApp seems to be cool as well as “kewl”.

Matt Zumwalt (MediaShelf) in Opinionated metadata: Bringing a bit of sanity to the world of XML metadata described OM, which looked like a programmatic way of working with XML in Ruby, but I thought his advice on how to write good code was more interesting. “Start with people’s stories, not the schema. Allow the vocabulary to reflect the team. And talk to the other team members.”

Ben Anderson (eXtensible Catalog) in Enhancing the performance and extensibility of XC’s metadata services toolkit outlined the development path and improvements to the Metadata Services Toolkit (MST). He had a goal of making the MST faster and more robust, and he did much of this by taking greater advantage of MySQL as opposed to processing various things in Solr.

wires |

power supply |

water cooler |

In Ask Anything! a.k.a. the ‘Human Search Engine’, moderated by Dan Chudnov (Library of Congress), a number of people stood up, asked the group a question, and waited for an answer. The technique worked pretty well and enabled many people to identify many others who: 1) had similar problems, or 2) offered solutions. For better or for worse, I asked the group if they had any experience with issues of data curation, and I was “rewarded” for my effort with the responsibility to facilitate a birds-of-a-feather session later in the day.

Standing in for Mike Graves, Tim Shearer (University of North Carolina at Chapel Hill) presented GIS on the cheap. Using different content from different sources, Graves is geo-tagging digital objects by assigning them latitudes and longitudes. Once this is done, his Web interfaces read the tagging and place the objects on a map. He is using a JavaScript library called OpenLayers for the implementation.
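To make the geo-tagging idea concrete, here is a small sketch of how tagged objects might be serialized so that a mapping library can plot them. The talk did not say which data format was used; GeoJSON is my assumption, and the object titles, URLs, and coordinates below are invented for illustration.

```python
import json


def to_geojson(objects: list) -> dict:
    """Turn geo-tagged objects into a GeoJSON FeatureCollection."""
    features = []
    for obj in objects:
        features.append(
            {
                "type": "Feature",
                # GeoJSON orders coordinates as [longitude, latitude].
                "geometry": {"type": "Point", "coordinates": [obj["lon"], obj["lat"]]},
                "properties": {"title": obj["title"], "url": obj["url"]},
            }
        )
    return {"type": "FeatureCollection", "features": features}


# Hypothetical digital objects, each tagged with a latitude and longitude.
tagged = [
    {
        "title": "Campus photograph",
        "url": "http://example.org/objects/1",
        "lat": 35.9132,
        "lon": -79.0558,
    },
]

print(json.dumps(to_geojson(tagged), indent=2))
```

The one detail that trips people up is the coordinate order: longitude comes first in GeoJSON, the reverse of how most of us say "lat/long" out loud.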

In Let’s get small: A Microservices approach to library websites by Sean Hannan (Johns Hopkins University) we learned how a myriad of tools and libraries are being used by Hannan to build websites. While the number of tools and libraries seemed overwhelming I was impressed at the system’s completeness. He was practicing the Unix Way when it comes to website maintenance.

When a person mentions the word “archives” at a computer conference, one of the next words people increasingly mention is “forensics”, and Mark Matienzo (Yale University) in Fiwalk with me: Building emergent pre-ingest workflows for digital archival records using open-source forensic software described how he uses forensic techniques to read, organize, and preserve digital media, specifically hard drives. He advocated a specific workflow for doing his work, a process for analyzing the disk’s content with a program called Gumshoe, and Advanced Forensic Format 4 (AFF4) for doing forensics against file formats. Ultimately he hopes to write an application binding the whole process together.

I paid a lot of attention to David Lacy (Villanova University) when he presented (Yet another) home-grown digital library system, built upon open source XML technologies and metadata standards because the work he has done directly affects a system I am working on colloquially called the “Catholic Portal”. In his system Lacy described a digital library system complete with METS files, a build process, an XML database, and an OAI-PMH server. Content is digitized, described, and ingested into VuFind. I feel embarrassed that I had not investigated this more thoroughly before.

Break-out (birds-of-a-feather) sessions were up next and I facilitated one on data curation. Between ten and twelve of us participated, and in a nutshell we outlined a whole host of activities and issues surrounding the process of data management. After listing them all and listening to the things discussed more thoroughly by the group I was able to prioritize. (“Librarians love lists.”) At the top was, “We won’t get it right the first time”, and I certainly agree. Data management and data curation are the new kids on the block and consequently represent new challenges. At the same time, our profession seems obsessed with creating processes and implementations, and not with evaluating those processes as needed. In our increasingly dynamic environment, such a way of thinking is not feasible. We will have to practice. We will have to show our ignorance. We will have to experiment. We will have to take risks. We will have to innovate. All of these things assume imperfection from the get-go. At the same time the issues surrounding data management have a whole lot in common with issues surrounding just about any other medium. The real challenge is the application of our traditional skills to the current environment.

A close second in the priorities was the perceived need for cross-institutional teams: groups of people including the office of research, libraries, computing centers, legal counsel, and of course researchers who generate data. Everybody has something to offer. Everybody has parts of the puzzle. But no one has all the pieces, all the experience, nor all the resources. Successful data management projects, defined in any number of ways, require skills from across the academe.

Other items of note on the list included issues surrounding: human subjects, embargoing, institutional repositories versus disciplinary repositories, a host of ontologies, format migration, storage and back-up versus preservation and curation, “big data” and “little data”, entrenching oneself in the research process, and unfunded mandates.

text mining |

As a part of the second day’s Lightning Talks I shared a bit about text mining. I demonstrated how the sizes of texts, measured in words, could be things we denote in our catalogs, thus enabling people to filter results in an additional way. I demonstrated something similar with Fog, Flesch, and Kincaid scores. I illustrated these ideas with graphs. I alluded to the “colorfulness” of texts by comparing & contrasting Thoreau with Austen. I demonstrated the idea of “in the same breath” implemented through network diagrams. And finally, I tried to describe how all of these techniques could be used in our “next generation library catalogs” or “discovery systems”. The associated video, here, was scraped from the high quality work done by Indiana University. “Thanks guys!”

At the end of the day we were given the opportunity to visit the University’s data center. It sounded a lot like a busman’s holiday to me so I signed up for the 6 o’clock show. I got on the little bus with a few other guys. One was from Australia. Another was from Florida. They were both wondering whether or not the weather was cold. It being around 10° Fahrenheit I had to admit it was. The University is proud of their data center. It can withstand tornado-strength forces. It is built into the side of a hill. It is only half full, if that, which is another way of saying, “They have a lot of room to expand.” We saw the production area. We saw the research area. I was hoping to see lots of blinking lights and colorful, twisty cables, but the lights were few and the cables were all blue. We saw Big Red. I wanted to see where the network came in. “It is over there, in that room.” Holding up my hands I asked, “How big is the pipe?” “Not very large,” was the reply, “and the fiber optic cable is only the size of a piece of hair.” I thought the whole thing was incongruous. All this infrastructure and it literally hangs on the end of a thread. One of the few people I saw employed by the data center made a comment while I was taking photographs. “Those are the nicest packaged cables you will ever see.” She was very proud of her handiwork, and I was happy to take a few pictures of them.

Big Red |

generator |

wires |

Day #3 (Thursday, February 10)

The last day of the conference began with a presentation by Jason Casden and Joyce Chapman (North Carolina State University Libraries) with Building an open source staff-facing tablet app for library assessment. In it they first described how patron statistics were collected. Lots of paper. Lots of tallies. Lots of data entry. Little overall coordination. To resolve this problem they created a tablet-based tool allowing the statistics collector to roam through the library, quickly tally how many people were located where and doing what, and update a centralized database rather quickly. Their implementation was an intelligent use of modern technology. Kudos.

Ian Mulvany (Mendeley) was a bit of an entrepreneur when he presented Mendeley’s API and university libraries: Three examples to create value on behalf of Jan Reichelt. His tool, Mendeley, is intended to solve real problems for scholars: making them more efficient as writers, and more efficient as discoverers. To do this he provides a service where PDF files are saved centrally, analyzed for content, and enhanced through crowd sourcing. Using Mendeley’s API things such as reading lists, automatic repository deposit, or “library dashboard” applications could be written. As of this writing Mendeley is sponsoring a contest with cash prizes to see who can create the most interesting application from their API. Frankly, the sort of application described by Reichelt is the sort of application I think the library community should have created a few years ago.

In Practical relevancy testing, Naomi Dushay (Stanford University) advocated doing usability testing against the full LAMP stack. To do this she uses a program called Cucumber to design usability tests, run them, look at the results, adjust software configurations, and repeat.
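The test, adjust, repeat loop can be illustrated with a small sketch. Dushay's tests were written with Cucumber, a Ruby tool; the Python below is only a stand-in for the idea, and the `Expectation` structure, the record identifiers, and the canned `fake_search` function are all hypothetical. In practice the search function would query the real index.

```python
from typing import Callable, List, NamedTuple


class Expectation(NamedTuple):
    query: str
    doc_id: str
    within: int  # doc_id must appear among the first `within` results


def check(
    search: Callable[[str], List[str]], expectations: List[Expectation]
) -> List[Expectation]:
    """Return the expectations that the current search configuration fails."""
    failures = []
    for exp in expectations:
        top_results = search(exp.query)[: exp.within]
        if exp.doc_id not in top_results:
            failures.append(exp)
    return failures


# Canned rankings standing in for a live search engine.
FAKE_RESULTS = {
    "walden": ["rec42", "rec7", "rec99"],
    "emma": ["rec3", "rec15"],
}


def fake_search(query: str) -> List[str]:
    return FAKE_RESULTS.get(query.lower(), [])


expectations = [
    Expectation("Walden", "rec42", within=1),
    Expectation("Emma", "rec15", within=1),
]

for failure in check(fake_search, expectations):
    print(f"FAIL: {failure.query!r} should rank {failure.doc_id} in the top {failure.within}")
```

Each failure points at a ranking the configuration gets wrong; after adjusting boosts or field weights the whole suite is run again, which also guards against a fix for one query breaking another.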

Kevin Clarke (NESCent) in Sharing between data repositories first compared & contrasted two repository systems: Dryad and TreeBase. Both have their respective advantages & disadvantages. As a librarian he understands why it is a good idea to have the same content in both systems. To this end he outlined and described how such a goal could be accomplished using a file packaging format called BagIt.
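For those unfamiliar with BagIt, a "bag" is just a directory with a small declaration file, a `data/` directory holding the payload, and a manifest of checksums so the receiving system can verify what arrived. The sketch below builds and checks a minimal bag with Python's standard library. It is not Clarke's code, the file name and contents are made up, and the choice of version 0.97 with SHA-256 checksums is my assumption; a real exchange would more likely use an existing BagIt library.

```python
import hashlib
import tempfile
from pathlib import Path


def make_bag(bag_dir: Path, files: dict) -> None:
    """Write payload files under data/ plus the declaration and manifest."""
    data_dir = bag_dir / "data"
    data_dir.mkdir(parents=True)
    manifest_lines = []
    for name, content in sorted(files.items()):
        (data_dir / name).write_bytes(content)
        digest = hashlib.sha256(content).hexdigest()
        manifest_lines.append(f"{digest}  data/{name}")
    (bag_dir / "bagit.txt").write_text(
        "BagIt-Version: 0.97\nTag-File-Character-Encoding: UTF-8\n",
        encoding="utf-8",
    )
    (bag_dir / "manifest-sha256.txt").write_text(
        "\n".join(manifest_lines) + "\n", encoding="utf-8"
    )


def validate_bag(bag_dir: Path) -> bool:
    """Recompute each payload checksum and compare it with the manifest."""
    manifest = (bag_dir / "manifest-sha256.txt").read_text(encoding="utf-8")
    for line in manifest.splitlines():
        if not line.strip():
            continue
        digest, rel_path = line.split(maxsplit=1)
        actual = hashlib.sha256((bag_dir / rel_path).read_bytes()).hexdigest()
        if actual != digest:
            return False
    return True


with tempfile.TemporaryDirectory() as tmp:
    bag = Path(tmp) / "tree-data-bag"
    make_bag(bag, {"tree.nex": b"#NEXUS\n"})  # hypothetical payload file
    print("bag is valid:", validate_bag(bag))
```

Because the format is nothing more than files and checksums, either repository can produce or consume a bag without knowing anything about the other's internals.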

The final presentation of the conference was given by Eric Hellman (Gluejar, Inc) and called Why (Code4) libraries exist. In it he posited that more than half of the books sold in the near future will be in ebook format. If this happens, then, he asked, will libraries become obsolete? His answer was seemingly both no and yes. “Libraries need to change in order to continue to exist, but who will drive this change? Funding agencies? Start-up companies? Publishers? OCLC? ILS vendors?” None of these things, he says. Instead, it may be the coders but we (the Code4Lib community) have a number of limitations. We are dispersed, poorly paid, self-trained, and too practical. In short, none of the groups he outlined entirely have what it takes to keep libraries alive. On the other hand, he said, maybe libraries are not really about books. Instead, maybe, they are about space, people, and community. In the end Hellman said, “We need to teach, train, and enable people to use information.”

conference center |

bell |

hidden flywheel |

Summary

All in all the presentations were pretty much what I expected and pretty much what was intended. Everybody was experiencing some sort of computing problem in their workplace. Everybody used different variations of the LAMP stack (plus an indexer) to solve their problems. The presenters shared their experience with these solutions. Each presentation was like a variation on a 12-bar blues. A basic framework is assumed, and the individual uses the framework to create beauty. If you like the idea of the blues framework, then you would have liked the Code4Lib presentations. I like the blues.

In the past eight months I’ve attended at least four professional conferences: Digital Humanities 2010 (July), ECDL 2010 (September), Data Curation 2010 (December), and Code4Lib 2011 (February). Each one had about 300 people in attendance. Each one had something to do with digital libraries. Two were more academic in nature. Two were more practical. All four were communities unto themselves; at each conference there were people of the in-crowd, newcomers, and folks in between. Many, but definitely not most, of the people I saw were a part of the other conferences but none of them were at all four. All of the conferences shared a set of common behavioral norms and at the same time owned a set of inside jokes. We need to be careful and not go around thinking our particular conference or community is the best. Each has something to offer the others. I sincerely do not think there is a “best” conference.

The Code4Lib community has a lot to offer the wider library profession. If the use of computers in libraries is only going to grow (which is an understatement), then a larger number of people who practice librarianship will need/want to benefit from Code4Lib’s experience. Yet the existing Code4Lib community is reluctant to change the format of the conference to accommodate a greater number of people. Granted, larger numbers of attendees make it more difficult to find venues and to enable a single shared conference experience, and they necessitate increased governance and bureaucracy. Such are the challenges of a larger group. I think the Code4Lib community is growing and experiencing growing pains. The mailing list increases by at least one or two new subscribers every week. The regional Code4Lib meetings continue. The journal is doing just fine. Code4Lib is a lot like the balance of the library profession. Practical. Accustomed to working on a shoestring. Service oriented. Without evolving in some way, the knowledge of Code4Libbers is not going to have a substantial effect on the wider library community. This makes me sad.

Next year’s conference — Code4Lib 2012 — will be held in Seattle (Washington). See you there?

wires |

self-portrait |
